BBC FM Stereo - Digital under the surface...

Amongst audio/Hi-Fi enthusiasts in the UK, Stereo FM radio continues to be regarded as superior in sound quality to the relative newcomer, DAB. The BBC’s broadcasts – particularly on Radio 3 – have often been held up as an exemplar of high audio quality, and on more than one occasion I’ve read this being attributed to their ‘analogue’ nature. Even now, with excellent BBC iPlayer streams available, FM stereo holds a special place in radio listening. However, there are also occasional concerns which tend to be obscure and misunderstood, and occasional threats from those in authority that FM transmissions might be ‘switched off’ because most people listen via other, explicitly digital, means. FM has survived for many decades, though, so it seems worthwhile to delve into the details and try to understand how it has managed to do so...

When you investigate this, a number of factors become apparent which may surprise most audio fans. The first, and perhaps the best known, is that for decades the BBC’s FM broadcasts have not been an entirely analogue system. This is because the BBC adopted, and still employs, the NICAM3 digital system its engineers developed and installed decades ago. This raises the question: does the digital NICAM3 system limit or reduce the sound quality we hear?

In recent years audio magazines have tended not to devote much space to reviewing Stereo FM tuners. Indeed, many manufacturers no longer bother to design and sell them. Hence detailed information on the quality of FM tuners, or on the requirements for good reception, tends to be sparse. You have to look back a few decades – e.g. to the circa 1980 era of the ‘super tuners’ – to find many fully detailed reviews of various examples. And if you do, although the sheer range of measurements made is impressive, one vital snag isn’t obvious: many of the bench test results obtained and published can actually be misleading! In effect, some tuners were made to perform well on a test bench, but in ways that may not represent their real performance when connected to a domestic FM antenna and trying to pick up a broadcast. This raises the question of how to assess what actually tends to happen in real use!

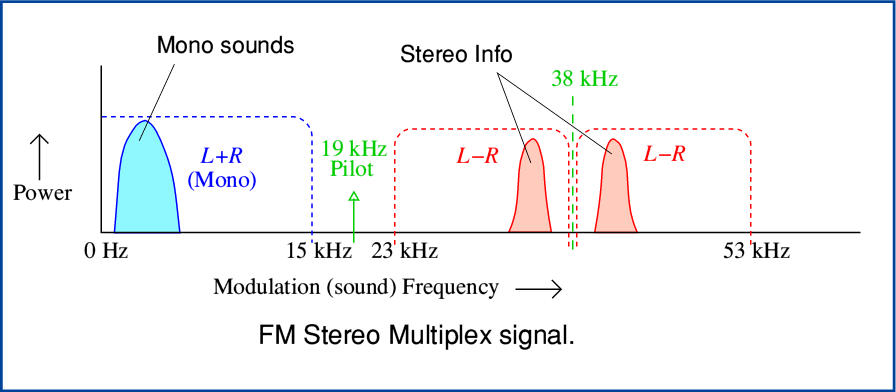

To start answering some of these questions, and to decide what actually sets the limits on sound quality, we can begin by considering how FM stereo works. (Here I’ll use the UK situation, so note that things may differ slightly in other countries.) The diagram above illustrates how the system operates. Originally, FM broadcasting was in mono, and the transmitted RF ‘carrier’ frequency was modulated with audio components up to 15kHz. A neat trick was then used to carry stereo. This uses an ultrasonic ‘subcarrier’ at 38kHz which conveys the difference between the left (L) and right (R) audio channels, while the original mono part now represents their sum, L+R. This method was adopted so that listeners could choose to go on using their old mono radios if they wished. These simply continue to detect the modulation up to 15kHz and play that ‘mono’ part of the sound, whereas the new-fangled stereo tuners and radios can detect and decode the ultrasonic L-R information and use it to reconstruct the stereo sound. However, this method essentially squeezes a quart into a pint pot! And this has consequences for the performance.
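As a rough illustration of the sum/difference arrangement, here is a minimal Python sketch of building and decoding a stereo ‘composite’ baseband signal. It assumes a 90%/10% split between programme and the 19kHz pilot, a perfectly locked 38kHz reference, and constant-level test audio so that simple averaging can stand in for the receiver’s filters. The function names and the sample rate are hypothetical choices for the example, not taken from any real receiver design.

```python
import math

FS = 228_000          # assumed sample rate: 12 samples per 19 kHz cycle, 6 per 38 kHz cycle
F_PILOT = 19_000.0    # pilot tone that tells the receiver a stereo signal is present
F_SUB = 2 * F_PILOT   # 38 kHz subcarrier carrying L-R (suppressed-carrier AM)
PILOT_LEVEL = 0.1     # assumed share of peak deviation given to the pilot
PROG_LEVEL = 0.9      # assumed share left for the programme (L+R and L-R)


def multiplex(left, right):
    """Build a composite baseband from equal-length L and R sample lists."""
    out = []
    for n, (l, r) in enumerate(zip(left, right)):
        t = n / FS
        mono = (l + r) / 2   # the L+R part that a mono set plays
        diff = (l - r) / 2   # the L-R part, shifted up around 38 kHz
        out.append(PROG_LEVEL * (mono + diff * math.sin(2 * math.pi * F_SUB * t))
                   + PILOT_LEVEL * math.sin(2 * math.pi * F_PILOT * t))
    return out


def decode_block(composite):
    """Recover one (L, R) pair from a block of constant-level test audio.

    The block length is assumed to be a whole number of 19 kHz cycles
    (a multiple of 12 samples here), so averaging cancels the pilot and
    subcarrier terms and acts as an idealised low-pass filter.
    """
    n_samples = len(composite)
    mono = sum(composite) / n_samples / PROG_LEVEL
    # Multiply by a locked 38 kHz reference (synchronous demodulation).
    diff = 2 * sum(x * math.sin(2 * math.pi * F_SUB * n / FS)
                   for n, x in enumerate(composite)) / n_samples / PROG_LEVEL
    return mono + diff, mono - diff
```

For example, with L = 0·5 and R = -0·5 (a purely ‘difference’ signal) the composite averages to zero, which is all a mono set would hear, while the stereo decoder still recovers both channels. With L = R there is nothing on the 38kHz subcarrier at all.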

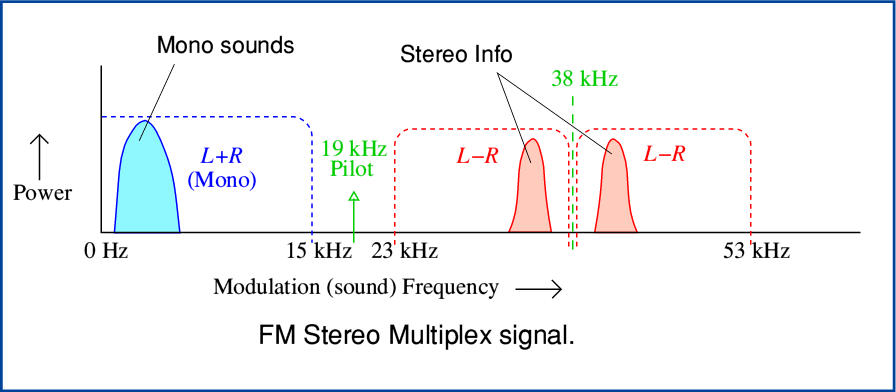

In the old magazine reviews and manufacturers’ specification sheets, the most obvious effect is that stereo reception requires a much stronger received signal and, even then, tends to produce more background noise. The reason stems from the way FM operates. If you look at textbooks on FM radio you will find that the output noise from an FM demodulator tends to have a specific sort of spectrum.

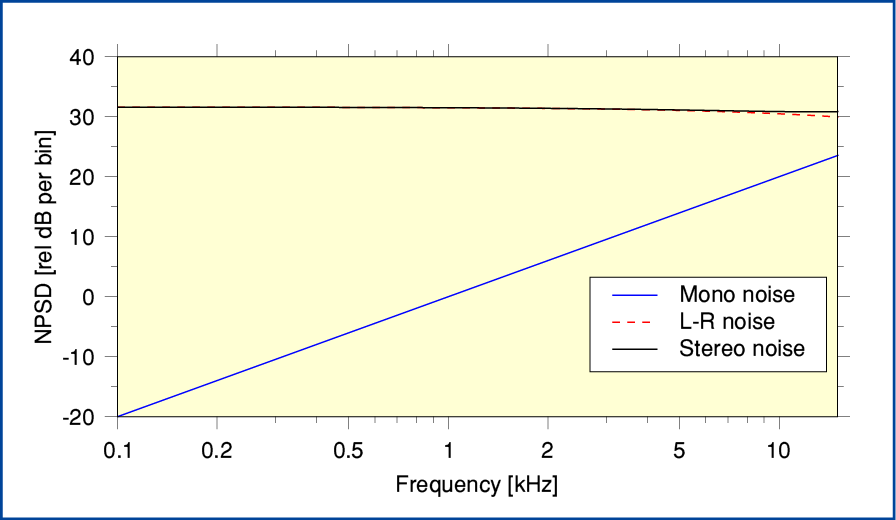

The above graph illustrates this behaviour. When the input background noise to an FM demodulator has a flat spectrum (i.e. the noise is uniformly spread across the spectrum), the output noise power density rises with audio frequency, in proportion to the square of the frequency. For a mono receiver the result is that the part of this output noise up to 15kHz appears at the output along with the wanted audio. The actual level of the output noise depends on the input FM signal/noise ratio presented to the FM demodulator. (Note that for the above graph, and some of those which follow, I have chosen to set the 0dB reference level as the amount of noise output around 1kHz. This simplifies comparing mono with stereo, etc, in terms of their relative noise performance.)

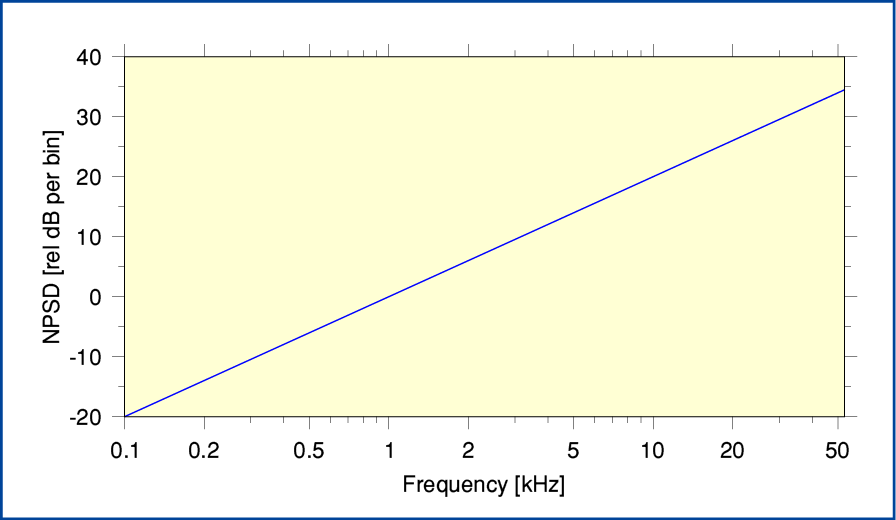

However, stereo reception also uses the ultrasonic output from the receiver’s FM demodulator, as shown in the above graph. Here I’ve changed the horizontal scale to be linear to make the consequences clearer. Because the noise level increases with frequency, the L-R output is accompanied by considerably more noise than the mono (L+R) part. Hence, all else being equal, the level of audible background noise rises quite significantly when we listen in stereo rather than mono.
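To put a rough number on ‘considerably more’, the short Python sketch below integrates an f² noise density over the mono band (0–15kHz) and over the band occupied by the L-R sidebands (23–53kHz). It only compares the raw demodulator noise falling in each band, under the idealised f² assumption; it is not meant to reproduce the stereo figures given later in this article, which also depend on how the L+R and L-R signals are scaled and recombined into left and right outputs.

```python
import math


def band_noise_power(f_lo, f_hi):
    """Relative noise power between f_lo and f_hi (kHz), assuming the
    demodulator's output noise power density rises as f squared."""
    return (f_hi ** 3 - f_lo ** 3) / 3   # integral of f^2 df


mono_band = band_noise_power(0, 15)            # L+R audio band, 0-15 kHz
diff_band = band_noise_power(38 - 15, 38 + 15)  # L-R sidebands, 23-53 kHz
ratio_db = 10 * math.log10(diff_band / mono_band)
```

The L-R band turns out to hold roughly forty times the noise power of the mono band (about 16dB more), which is why the stereo decoder drags in so much extra hiss.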

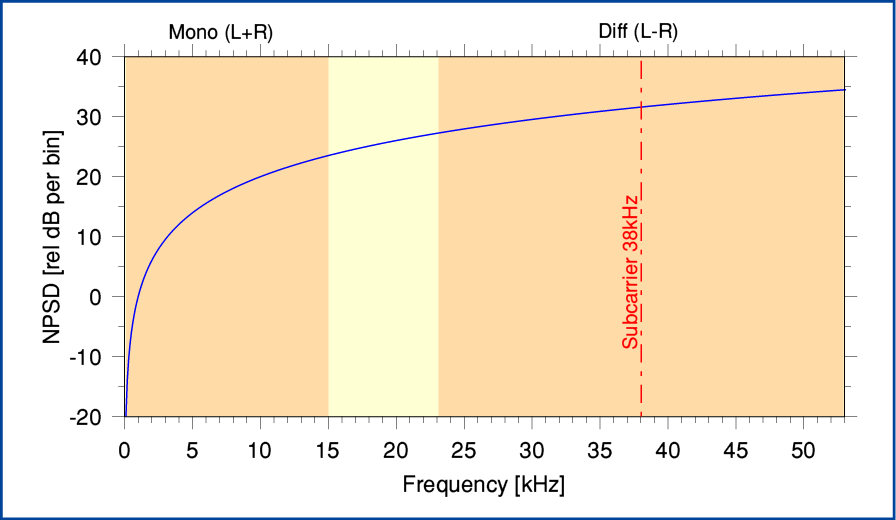

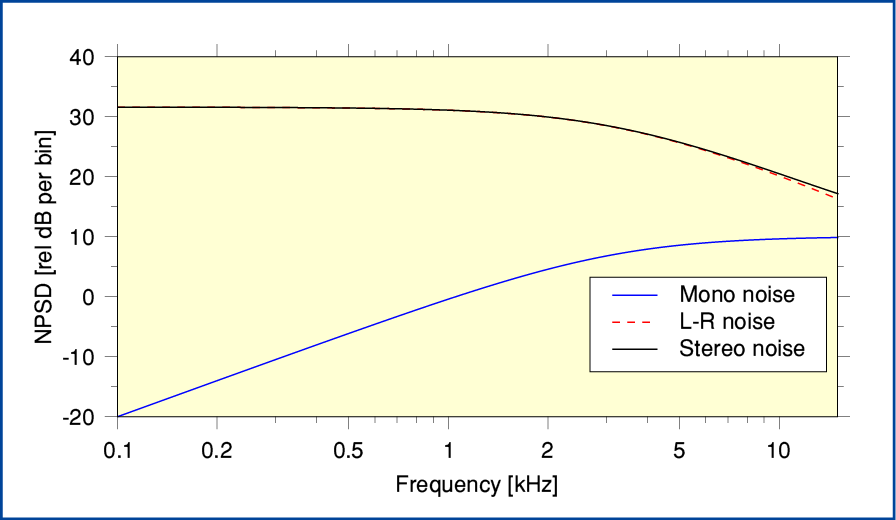

The above shows the effect on the audible output when the L-R noise spectrum is added into the stereo output audio. The overall noise level is not only much higher than for mono, it also has a different spectrum. In an ideal receiver we can expect the total output noise for a given received RF power to be around 12dB higher for stereo than for mono reception, i.e. over ten times the noise power!

In practice a technique called pre-emphasis and ‘de-emphasis’ is employed to improve the signal/noise ratio: the higher audio modulation frequencies are boosted (pre-emphasis) before transmission, and this is then corrected (de-emphasis) on reception. This changes the noise spectra emerging from the FM receiver as shown above. (I’ve used the UK ‘50 microsecond’ de-emphasis here.) Unfortunately, although this does reduce the overall noise levels, it actually makes the difference between mono and stereo even bigger: about 17dB for a perfect receiver!
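As a check on the mono figure, the following Python sketch numerically integrates the f² noise density over 0–15kHz, with and without a single-pole 50 microsecond de-emphasis (corner frequency 1/(2π × 50µs), about 3·18kHz). It assumes an ideal receiver and an ideal de-emphasis network; the helper name is hypothetical.

```python
import math

TAU = 50e-6                    # UK de-emphasis time constant (s)
F0 = 1 / (2 * math.pi * TAU)   # de-emphasis corner frequency, about 3.18 kHz
F_MAX = 15_000.0               # mono audio bandwidth (Hz)


def mono_noise(deemphasis, steps=15_000):
    """Integrate an f^2 noise density over 0-15 kHz (midpoint rule),
    optionally weighted by the power response of 50 us de-emphasis."""
    df = F_MAX / steps
    total = 0.0
    for i in range(steps):
        f = (i + 0.5) * df
        density = f * f
        if deemphasis:
            density /= 1 + (f / F0) ** 2   # |H(f)|^2 of a single-pole low-pass
        total += density * df
    return total


improvement_db = 10 * math.log10(mono_noise(True) / mono_noise(False))
```

This comes out at about -10dB, consistent with the -10·0 mono value for de-emphasised output in the table of noise levels in this article.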

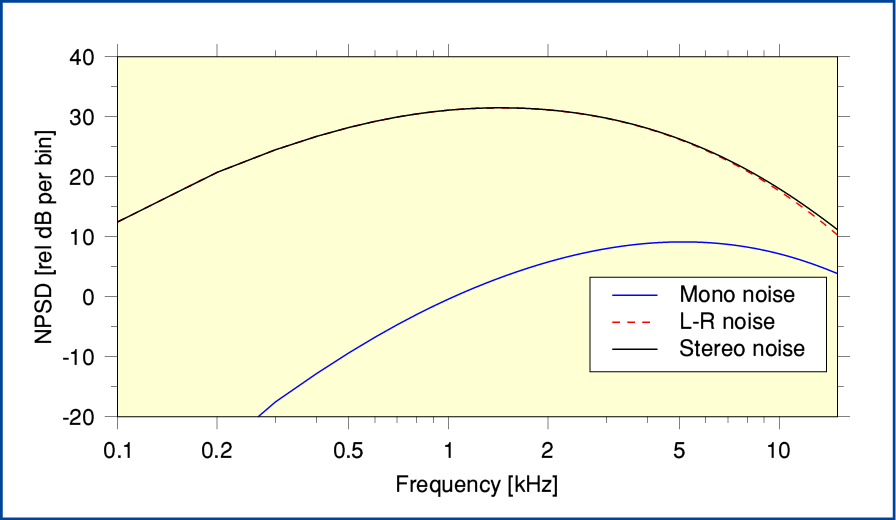

In general, magazine reviews and makers’ publicity material have tended to quote noise measurements to which a ‘weighting’ has been applied. This is nominally to take into account that noise at some frequencies is more audible than at others. For manufacturers it also tends to have the convenient result of providing impressively lower noise values! Various weightings have been used over the years. The above graph illustrates the effect of ‘A weighting’, which was a common choice. It tends to have more effect on the mono noise because most of that is at high frequencies.
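For readers who want to experiment with weighting, here is a Python sketch of the A-weighting curve using the widely published analytic approximation to the IEC 61672 definition (normalised to 0dB at 1kHz). I’m assuming this standard form here; it is not necessarily the exact implementation used to draw the graph above.

```python
import math


def a_weighting_db(f):
    """A-weighting gain in dB at frequency f (Hz), via the standard
    analytic approximation (pole frequencies 20.6, 107.7, 737.9, 12194 Hz)."""
    f2 = f * f
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(ra) + 2.0   # +2.0 dB normalises the curve to 0 dB at 1 kHz
```

It gives roughly -19dB at 100Hz, 0dB at 1kHz and -2·5dB at 10kHz. Multiplying a noise power spectrum by 10^(A/10) before integrating gives an A-weighted total.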

From the above we can summarise the following noise performance levels (in dB) relative to the unmodified mono output for a 15kHz audio bandwidth:

| | Mono | Stereo | Stereo - Mono |
|---|---|---|---|
| Unmodified demodulator output | 0·0 (ref. level) | 12·2 | 12·2 |
| With 50 microsecond de-emphasis | -10·0 | 7·1 | 17·1 |
| With de-emphasis and ‘A’ weighting | -11·8 | 6·8 | 18·6 |

This helps to show why an FM tuner needs to be able to receive a much higher signal power to provide good stereo reception than is required for mono! That said, the actual difference between mono and stereo performance for real-world tuners tends to be smaller than the above as a result of additional ‘internal’ contributions to the noise, or other circuit imperfections.

Reviews in audio magazines would typically include a graph showing how the output signal/noise ratio (SNR) varied with the input signal level. These generally showed the noise level dropping as the input signal voltage was increased from a few μV up to around a mV or two. This was then used as a guide to the signal level required for the listener to obtain an acceptably low noise level. However, there is a hidden assumption that could make this misleading.

The snag is that these review measurements were made by connecting the tuner’s input directly to an RF signal generator via a short run of RF cable (usually co-ax). This means that the ‘background noise’ presented to the tuner’s input came from either the bench-test RF signal generator or the amplifiers in the tuner. This set the input SNR, which in turn helped to set the output audio noise level. In real use, however, the tuner is connected to, and fed by, an antenna. Any noise from a test-bench signal generator is then, of course, absent. But it also means that any background radio noise in the local environment can be picked up by the antenna and fed into the tuner! As a result, the background noise level in real use may be quite different to that presented to the tuner during a bench test, and the tuner may not perform as well as the review results imply if they are taken as a reliable guide.
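A simple way to see the effect is to remember that uncorrelated noise powers add. The hypothetical Python helper below treats the bench-test noise as the reference and adds some antenna-borne environmental noise on top. It is a sketch of the input SNR only; above the FM threshold the output audio noise tends to rise broadly in step with this, but the real relationship depends on the tuner.

```python
import math


def snr_with_extra_noise_db(snr_bench_db, extra_noise_rel_db):
    """Input SNR after adding uncorrelated environmental noise.

    snr_bench_db: SNR with only the bench/tuner noise present.
    extra_noise_rel_db: environmental noise power relative to that bench noise.
    Uncorrelated noise powers add, so the SNR falls by 10*log10(1 + ratio).
    """
    extra = 10 ** (extra_noise_rel_db / 10)
    return snr_bench_db - 10 * math.log10(1 + extra)
```

For instance, environmental noise equal to the tuner’s own noise costs about 3dB, and noise 10dB above it costs about 10·4dB, none of which a bench test would reveal.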

In addition, both the noise (N) and distortion (D) levels relative to the wanted audio signal (S) level matter in normal use. It is their combination (N+D), expressed as the ratio S/(N+D), which determines the level of imperfection in the output. And, unfortunately, there are also some problems lurking behind typical bench-test measurements of the distortion performance of an FM tuner...
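Since noise is usually quoted in dB and distortion in percent, it helps to put them on the same footing. The Python sketch below converts between the two and combines them, assuming the noise and distortion products are uncorrelated so that their powers add. This is one plausible way of drawing fixed N+D boundaries such as 0·1% or 0·2%; the function names are hypothetical.

```python
import math


def percent_to_db(percent):
    """Express a level given as % of 100% modulation in dB."""
    return 20 * math.log10(percent / 100)


def db_to_percent(db):
    """Express a level in dB relative to 100% modulation as a percentage."""
    return 100 * 10 ** (db / 20)


def n_plus_d_percent(noise_db, distortion_percent):
    """Combine noise (dB re 100% modulation) and distortion (%) into N+D (%).

    Assumes noise and distortion are uncorrelated, so their powers
    (amplitudes squared) add.
    """
    n = db_to_percent(noise_db)
    return math.sqrt(n * n + distortion_percent * distortion_percent)
```

So 0·1% corresponds to -60dB, and a tuner with -60dB noise plus 0·1% distortion ends up at about 0·14% N+D (roughly -57dB).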

If you look at the same old reviews and makers’ specifications you will tend to find measured/claimed distortion values typically in the range from 0·1% to around 0·3%, depending on the tuner and the choice of test waveform. As with noise, the stereo result tends to be higher than for mono. However, if you look more closely you may find that quite often the ‘stereo’ measurement was produced simply by adding the 19kHz ‘pilot tone’ that tells the tuner the input is ‘stereo’ and activates its stereo decoder. That gives rise to the ‘stereo’ noise levels. But the audio test waveform is still essentially a mono sinewave, i.e. the left and right channels are identical, so there is no L-R ‘difference’ modulation in the region around 38kHz!

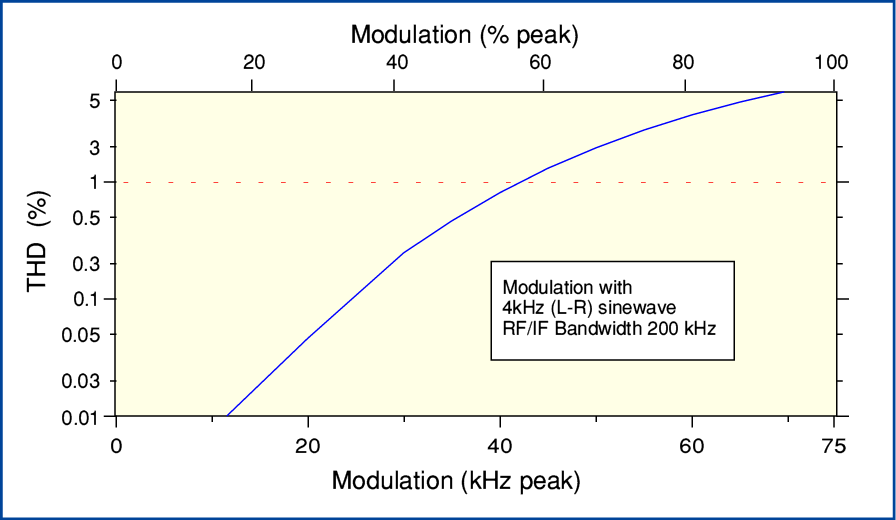

This matters because when the signal bandwidth is limited – as is always the case for a decent tuner – the amount of distortion increases with the modulation frequency. I carried out a detailed investigation of this effect a few years ago [see ref 1], and the graph above illustrates one of the key results.

It shows what can happen if a stereo sound generates out-of-phase components. This can easily happen for sources which are near the centre of a stereo image but aren’t exactly the same distance from two microphones! The result may be distortion levels well above 1%, despite much lower values being quoted in reviews. Here the test signal was a 4kHz sinewave; higher frequencies would produce even higher distortion levels.
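One rough way to see why genuine L-R modulation demands more bandwidth is Carson’s rule, a standard rule of thumb estimating the bandwidth holding about 98% of an FM signal’s power as 2 × (peak deviation + highest modulating frequency). The Python sketch below applies it with the UK broadcast peak deviation of ±75kHz. This is my choice of illustration, not the method used in the investigation referenced above, and it crudely treats the whole 75kHz deviation as available at the highest frequency.

```python
def carson_bandwidth_hz(peak_deviation_hz, max_mod_freq_hz):
    """Carson's rule estimate of the RF bandwidth needed by an FM signal."""
    return 2 * (peak_deviation_hz + max_mod_freq_hz)


DEVIATION = 75_000                                   # UK VHF FM peak deviation, +/-75 kHz
mono_bw = carson_bandwidth_hz(DEVIATION, 15_000)     # audio up to 15 kHz
stereo_bw = carson_bandwidth_hz(DEVIATION, 53_000)   # L-R sidebands reach 53 kHz
```

This gives about 180kHz for mono but about 256kHz once components up to 53kHz are present, so a tuner whose filters suit mono will trim a stereo signal rather more.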

The designer of an FM tuner can, of course, ‘tweak’ the design to have a wide bandwidth and thus get lower distortion levels in a bench test. But this makes the tuner more prone to interference from other broadcasts, etc, when put into real use. And in reality even the signal radiated by the transmitter will have a finite bandwidth. And, alas, this isn’t the only mechanism which can cause distortion...

Although it has tended to be neglected and forgotten, FM Stereo reception suffers from a problem known as ‘multipath distortion’. This occurs because objects like hills, buildings, and even trees reflect some of the VHF signals radiated by a transmitter. Some of these reflections may then reach the listener’s antenna and be fed to their FM tuner along with the ‘direct path’ signal. Because a reflected signal has travelled by an indirect route, it arrives slightly later than the direct signal. A full analysis of the consequences is quite complicated, but the outcome tends to be increased distortion and sometimes also increased noise. And – as you might now guess – the deterioration tends to be worse for stereo than for mono because of the higher modulation frequencies used to convey the L-R information.
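The following Python sketch is far from the full analysis, but it gives a feel for the numbers. It computes the delay of a reflection that travels a hypothetical extra 300m, and how far out of step a modulation component at a given frequency then is between the direct and delayed copies. The larger this offset, the more the combined signal departs from a clean FM waveform, so it serves only as a crude indicator of why higher modulation frequencies suffer more.

```python
C = 299_792_458.0   # speed of light (m/s)


def echo_delay_us(extra_path_m):
    """Delay (microseconds) of a reflection travelling extra_path_m further."""
    return extra_path_m / C * 1e6


def modulation_phase_offset_deg(extra_path_m, mod_freq_hz):
    """How far out of step (degrees) a modulation component at mod_freq_hz
    is between the direct signal and the delayed reflection."""
    return 360.0 * mod_freq_hz * extra_path_m / C
```

A 300m detour delays the reflection by about 1µs, which puts a 15kHz component roughly 5·4° out of step but a 53kHz L-R component roughly 19° out of step.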

This issue was investigated in detail a number of times during the 20th century. (If you are interested, a useful starting point is an article by Pat Hawker of the IBA in the April 1980 issue of Wireless World magazine.) However, its impact on VHF Stereo FM has since largely been forgotten in the rush to switch broadcasting over to digital methods. Nor, of course, is it considered when people simply connect a signal generator to an FM tuner for a magazine review or to produce specifications for a maker’s brochure! But the evidence we have makes it plausible that most FM Stereo listeners are affected by it, with distortion levels higher than those indicated by any review measurements of their tuner!

As a result of all the above factors, it seems unlikely that most FM Stereo listeners will be getting an actual background noise+distortion (N+D) level of only around 0·1% (equivalent to -60dB) unless the audio modulation level is also quite low! Having reached this point, we can now consider the impact of the NICAM3 system on noise and distortion performance, and then compare this with the actual FM Stereo ‘link’ from the transmitter to the listener’s loudspeakers.

NICAM Rulz?

A few years ago I wrote a webpage which describes the development of NICAM3 and outlines how it works [see ref 2]. So here I’ll just summarise it and use the relevant details as a basis for considering the implications for FM Stereo performance.

The early NICAM3 encoders employed an ADC (Analogue to Digital Convertor) to generate a stereo series of 14 bit LPCM samples at a 32k sample rate. For each channel these were then grouped into sets of 32 samples, each group covering 1ms of audio. They were then converted into 10 bit LPCM values using a scaling factor which depends on the amplitude of the largest value in that 32 sample group. These days, however, the BBC has standardised on internally distributing audio as 24 bit LPCM samples at a 48k sample rate. Hence the conversion process nowadays takes in 48k/24 bit LPCM and outputs 32k NICAM3, using digital computation to obtain the results.
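The block-scaling idea can be sketched in a few lines of Python. This is a minimal illustration of near-instantaneous companding, assuming five power-of-two scale factors (shifts of 0 to 4 bits, the most that a 14-to-10-bit reduction allows), round-to-nearest requantisation with clipping, and hypothetical function names. Details of the real encoder, such as how the scale factor is conveyed to the decoder, are not modelled.

```python
BLOCK_LEN = 32                  # samples per channel per block (1 ms at 32 kHz)
IN_BITS, OUT_BITS = 14, 10      # 14-bit input samples, 10 bits transmitted
MAX_SHIFT = IN_BITS - OUT_BITS  # shifts 0..4 give five power-of-two scale factors


def _fits(samples, bits):
    """True if every sample fits in a signed integer of the given width."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return all(lo <= s <= hi for s in samples)


def compress_block(samples):
    """Return (shift, codes) for one block of signed 14-bit samples.

    The smallest shift that lets the loudest sample fit is chosen, so
    quiet blocks keep more of their low-order bits than loud ones.
    """
    if len(samples) != BLOCK_LEN:
        raise ValueError("expected a 32-sample block")
    shift = next(s for s in range(MAX_SHIFT + 1) if _fits(samples, OUT_BITS + s))
    lo, hi = -(1 << (OUT_BITS - 1)), (1 << (OUT_BITS - 1)) - 1
    codes = [max(lo, min(hi, round(s / (1 << shift)))) for s in samples]
    return shift, codes


def expand_block(shift, codes):
    """Rebuild approximate 14-bit samples from a compressed block."""
    return [c << shift for c in codes]
```

A quiet block (all samples within ±511) passes through unchanged, while a full-scale block loses its four lowest bits. The step size, and hence the added noise/distortion, therefore scales with the loudest sample in each block.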

A set of five different scaling factors is provided, each differing from the next by × 2 in amplitude – i.e. a gain change of 6·02dB. So the scaling varies from unity for the loudest audio to 4 × 6·02 = 24·1 dB for the quietest sound levels (five factors span four steps, matching the 4 bits dropped in going from 14 to 10 bits). A consequence of this scaling is that the noise/distortion introduced by the NICAM reduction to 10 bits per sample, relative to 100% modulation, also scales with the signal level, i.e. the noise/distortion added by NICAM rises and falls with the audio level. The amount and type of noise/distortion generated by the NICAM3 process depends on whether the conversion is dithered or not – and, if dithered, on the form of dither chosen. By consulting a standard reference such as Watkinson’s The Art of Digital Audio we can give the following values:

In the absence of any dithering, the quantisation distortion level will be -62dB (approx 0·08%) relative to the maximum level of a NICAM3 block. If dither with a triangular probability distribution is employed, this changes to -57·2dB (about 0·14%). Note these values are relative to the scaled peak of the signal block’s range. Hence they represent the noise/distortion level for loud audio that reaches the maximum levels of the analogue range of the FM broadcast. But for lower audio levels the NICAM3 scaling means they are relative to lower reference levels, i.e. less noise/distortion is added when the audio level itself is lower.
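The two figures above follow from standard textbook relations, sketched here in Python. The assumptions are a full-scale sinewave as the reference, and non-subtractive triangular (TPDF) dither of 2 LSB peak-to-peak, whose noise power (q²/6) adds to the quantiser’s own q²/12.

```python
import math


def full_scale_sine_snr_db(bits):
    """Undithered quantisation S/N for a full-scale sinewave: 6.02*bits + 1.76 dB."""
    return 6.02 * bits + 1.76


def tpdf_dither_penalty_db():
    """TPDF dither adds q^2/6 to the quantiser's q^2/12, tripling the noise
    power, which costs 10*log10(3), about 4.77 dB."""
    return 10 * math.log10(3)


undithered = full_scale_sine_snr_db(10)            # 10-bit NICAM3 samples
dithered = undithered - tpdf_dither_penalty_db()
```

For 10 bits this gives about 62dB undithered and about 57·2dB with TPDF dither, matching the values quoted.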

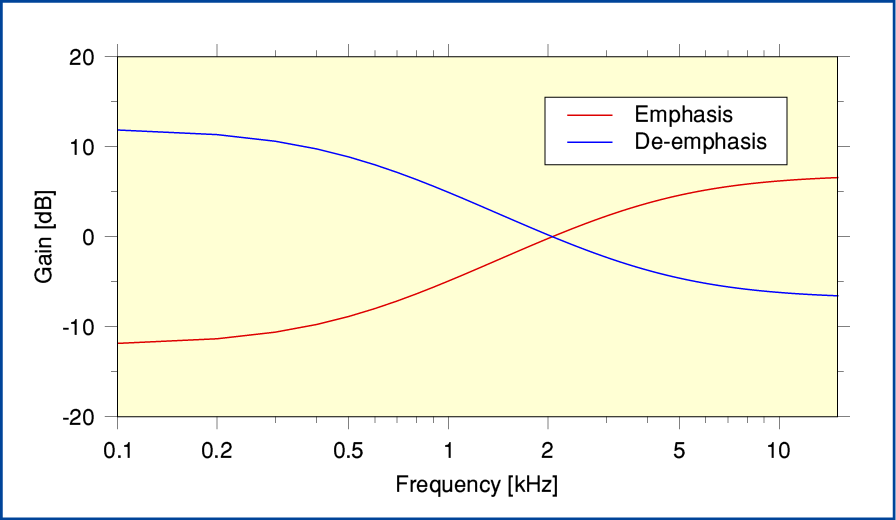

It is worth noting at this point that the BBC also employ a form of pre/de-emphasis to help improve the performance of the NICAM3 system. This is mentioned in, for example, the BBC Research Department paper BBC RD 1978/14 by McNalley which specifies the CCITT ‘J17’ emphasis. As with the use of pre/de-emphasis for Stereo FM broadcasts this has an effect on the resulting S/(N+D) performance of a NICAM3 link so we should now take this into account.

The above shows the frequency response curves for the J17 emphasis and de-emphasis. Note that the logarithmic frequency scale may mislead: in reality a much wider range of frequencies lies above the crossover (at about 2 kHz) than below it. The use of this emphasis essentially applies what communications and information engineers call ‘pre-whitening’. This allowed the BBC engineers to exploit the fact that, statistically, speech and music tend to have a power spectrum that falls with frequency, whereas the quantisation noise/distortion of NICAM3 encoding tends, statistically, to be flat. At the time it was commented that, with optimum adjustment of the encoder-decoder gains, the J17 equalisation provided about “one bit’s worth” (i.e. about 6dB) of improvement in the delivered SNR. However, to be cautious, we can perhaps assume an A-weighted subjective improvement of more like 3dB, as the real benefit will depend on the spectrum and statistics of the quantisation noise/distortion.

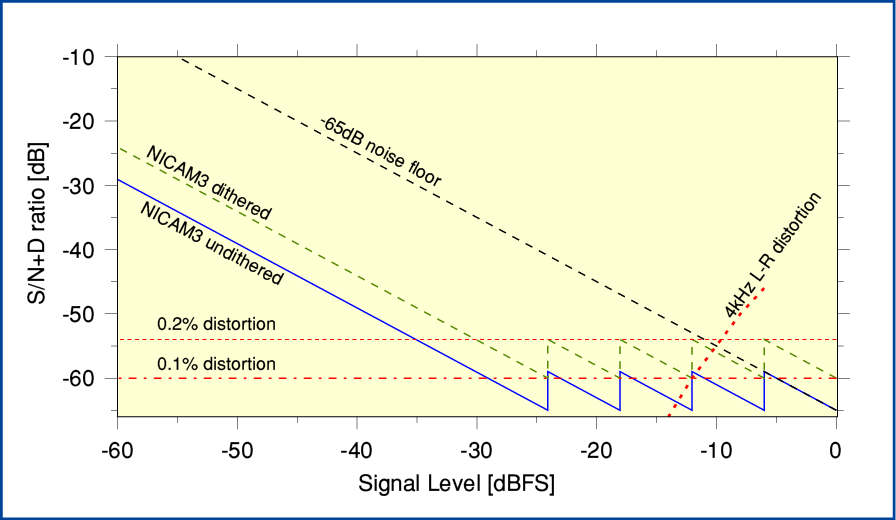

Having considered them individually, we can now combine and compare the various factors that tend to limit or degrade performance. The graph above plots the various contributions together. Two of the lines show the likely S/(N+D) contribution of the NICAM3 encoding when the effect of the pre-whitening is taken into account. (The BBC comment implies these will actually be about 3dB lower than shown here.) The other lines show the effect on S/(N+D) of the tuner’s noise floor and distortion levels. The 0·1% and 0·2% distortion lines serve as a reference, but note that in reality, for real stereo audio at high modulation levels, the distortion is more likely to depend on signal level in the way shown by the 4kHz L-R line. Overall, though, we can see that the effect of NICAM3 tends to be below that of the noise and distortion added by the tuner, even ignoring environmental noise picked up by an antenna and any multipath distortion. If we were to add some noise and distortion due to these real-world problems, and also accept the BBC engineers’ view of the effectiveness of the J17 pre-whitening, then it becomes clear that the effects of NICAM3 will generally be submerged by the real-world ‘analogue’ factors.

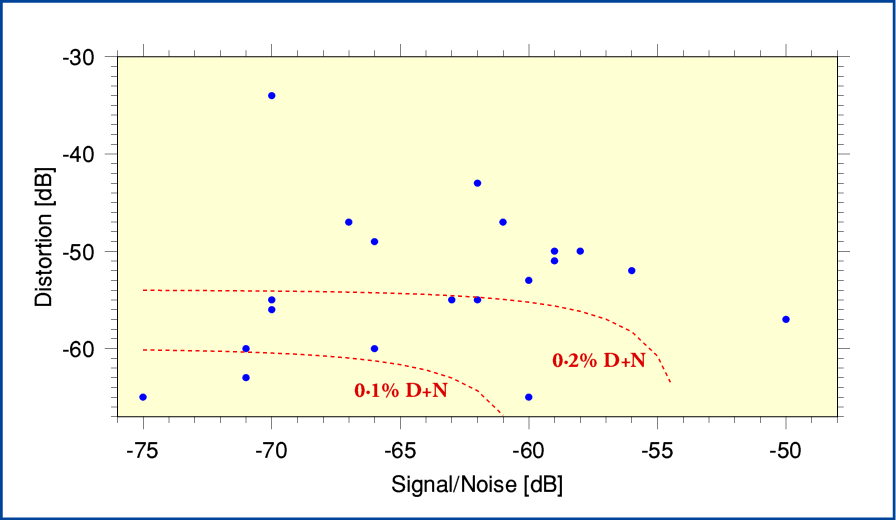

To add more context I have plotted the above graph which shows the measured performances of a couple of dozen good-quality tuners. The horizontal axis represents the measured S/N relative to 100% modulation when the pilot tone is present and the tuner is in ‘stereo’ mode. The vertical axis represents the measured distortion for each tuner when in ‘stereo’ mode.

The plotted values are based on a series of measurements made by the same reviewer using the same measurement setup, etc. A specific point to note is that the reviewer chose to use a ‘stereo’ 1kHz 100% modulation audio signal for the distortion measurements and measured THD (which might exclude any anharmonic distortion products). The result for each tuner is shown by a blue-filled circle. For reference I have added two broken red lines which mark where the combination of the tuner’s noise and distortion would be 0·1% and 0·2% relative to 100% modulation. This shows that almost all the tuners exceed the 0·1% level, and many are well above 0·2%.

A big snag when trying to interpret these results is, of course, that – as with many other reviews – the ‘stereo’ distortion measurement was made simply with the stereo decoder switched on by the presence of a pilot tone, using an audio signal that was itself plain ‘mono’ L+R! Thus the measurements don’t cover the effects of any genuine ‘stereo’ L-R ultrasonic components in the modulation, which tend to significantly increase the distortion level. So, despite being at 100% modulation, they tend to underplay the real effect of genuine stereo modulation in causing higher levels of distortion! And – as previously discussed – bench tests dodge any problems caused by real-world background noise, interference, and multipath effects. So we can expect that in reality the actual background noise and distortion levels would be somewhat higher than the above bench-test points indicate.

Ideally, someone would have conducted a more realistic set of measurements on a number of tuners. As it is, the evidence indicates that the BBC engineers were correct to decide that NICAM3 was – and still is – a satisfactory method for conveying stereo to their FM transmitters, because in real use the limitations of receivers and FM reception will dominate over the limits of NICAM3. This conclusion tends to be supported by the way so many audiophiles in the UK have continued to hold BBC R3 FM broadcasts in high regard, and often assume it is in essence an ‘analogue’ system, not a ‘digital’ one!

[1] This webpage investigates the impact on FM of it having to use a finite bandwidth.

[2] This webpage outlines the history and operation of BBC NICAM.

Jim Lesurf

3600 Words

24th Mar 2020