Figure 1

| Figure 4 Shown to the left

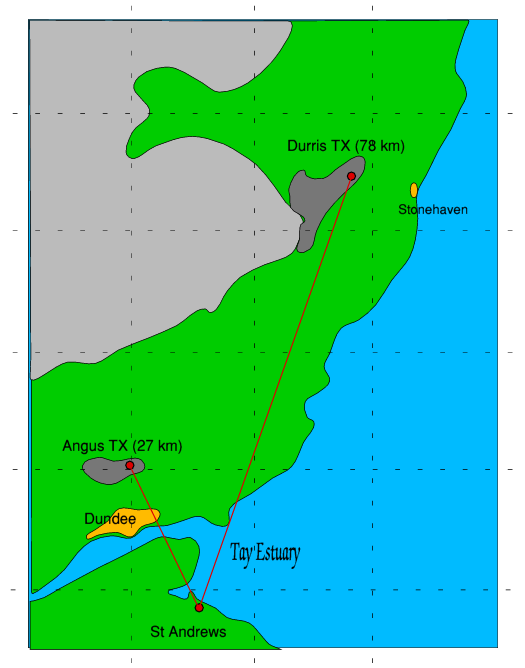

The map on the left shows where I live relative to the two closest DTTV transmitter sites. The nearest, Angus, is about 27 km away to the NNW. The best alternative I have to that is Durris, about 78 km away to the NNE. Durris is much further away, but the path is a relatively clear one. Although there is no local 4G coverage as yet, the closest existing mobile base station to my house is about 400 metres away, also to the NNW. From where I live the direction to this site is almost the same as that for the Angus DTTV site. So when I and my neighbours point our TV aerials at the Angus transmitter we’re also pointing them at this base station! |

|

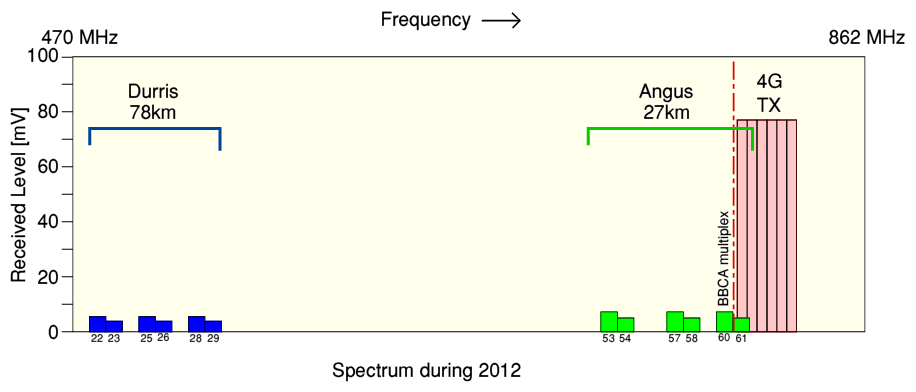

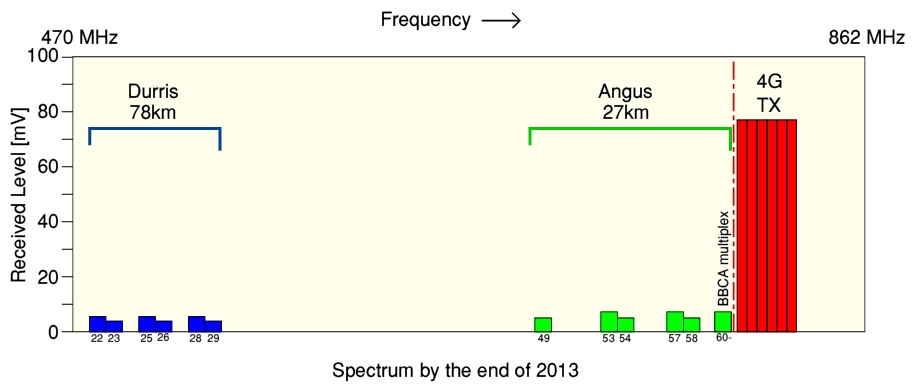

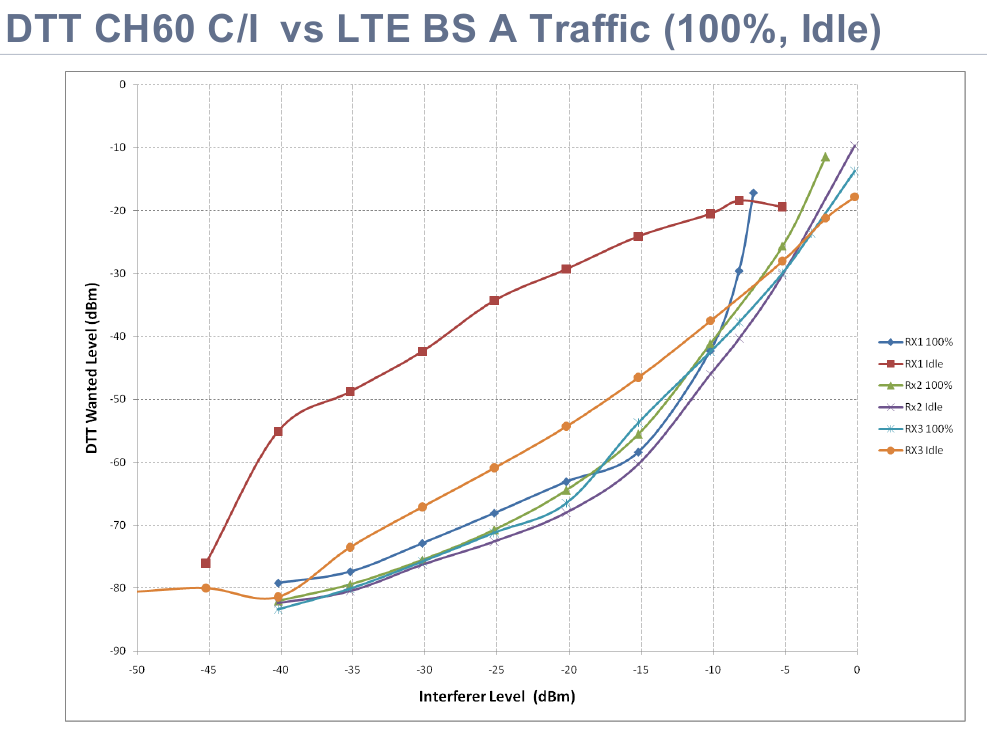

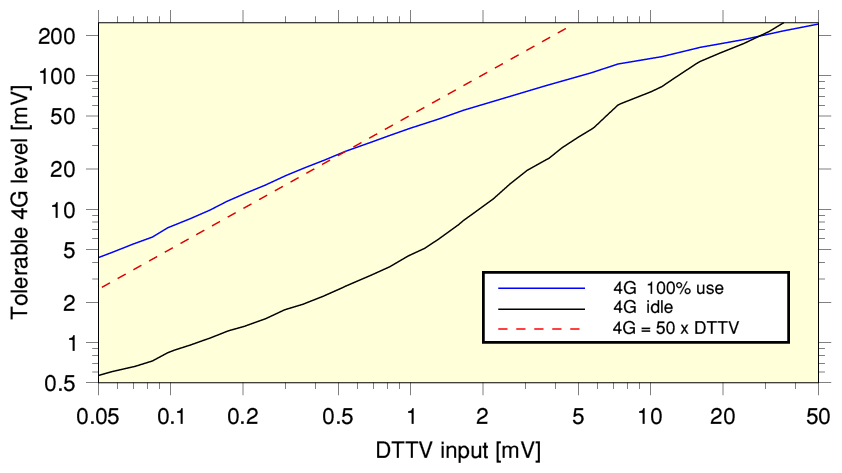

Facts from figures.

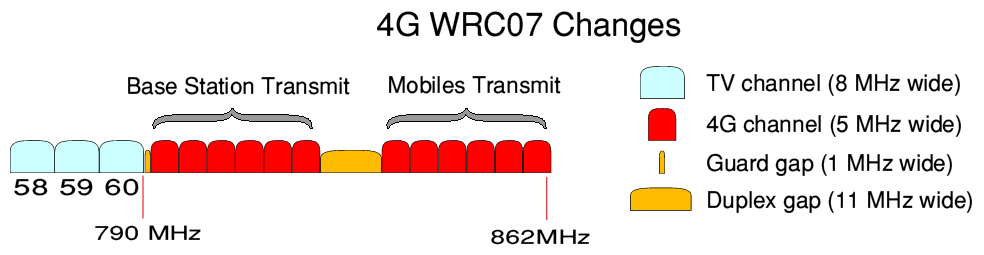

If you’re not familiar with units like ‘dB’ or terms like EIRP, the following explanations may help. Engineers regard ‘dB’ (decibels) as a convenient way to indicate the relative levels of electrical signals like UHF transmissions. If one signal is shown as being 20 dB higher than another, that means its power is 100 times bigger. 30 dB means the power is 1000 times bigger. So a level of, say, -20 dB is 30 dB above one of -50 dB, and is therefore 1000 times more powerful. The voltages change more slowly because the power grows in proportion to the square of the signal voltage. So 20 dB means the voltage is just 10 times bigger. ‘dBm’ has a slightly different meaning. There the ‘m’ stands for ‘milliwatt’ (i.e. one thousandth of a Watt). Because of that, a value like ‘60 dBm’ means ‘1000000 times more powerful than a milliwatt’, i.e. 1000 Watts or 1 kW (kilowatt). So a 4G base station transmitting an EIRP (Effective Isotropic Radiated Power) of around 60 dBm/10 MHz will behave like one radiating 1000 Watts of Radio Frequency power in a 10 MHz ‘slot’ in the spectrum. In fact, the 4G power levels are rather higher than were allowed in the past for earlier generations of mobile phone base stations. The power needs to be high because 4G is planned to radiate much bigger amounts of information per second to provide all the fancy new 4G features! In engineering terms, higher data rates mean higher transmitter power levels. Alas, it can also mean stronger signals that cause more interference!... |
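For readers who like to check the arithmetic, the dB and dBm conversions described above can be sketched in a few lines of Python. The function names here are my own labels for illustration, not part of any standard library; the formulas are just the usual definitions (10 times the base-10 logarithm of a power ratio, 20 times for a voltage ratio).

```python
import math


def db_to_power_ratio(db: float) -> float:
    """Convert a level difference in dB to a power ratio."""
    return 10 ** (db / 10)


def db_to_voltage_ratio(db: float) -> float:
    """Convert a level difference in dB to a voltage ratio.

    Power goes as the square of voltage, hence the divisor of 20.
    """
    return 10 ** (db / 20)


def dbm_to_watts(dbm: float) -> float:
    """Convert an absolute level in dBm (dB relative to 1 milliwatt) to Watts."""
    milliwatts = 10 ** (dbm / 10)
    return milliwatts / 1000


def watts_to_dbm(watts: float) -> float:
    """Convert a power in Watts to dBm."""
    return 10 * math.log10(watts * 1000)


if __name__ == "__main__":
    print(db_to_power_ratio(20))            # power ratio for 20 dB
    print(db_to_power_ratio(30))            # power ratio for 30 dB
    print(db_to_power_ratio(-20 - (-50)))   # -20 dB compared with -50 dB
    print(db_to_voltage_ratio(20))          # voltage ratio for 20 dB
    print(dbm_to_watts(60))                 # 60 dBm expressed in Watts
```

Run as a script, this should reproduce the figures quoted above: ratios of 100 and 1000 for 20 dB and 30 dB, a voltage ratio of 10 for 20 dB, and 1000 Watts for 60 dBm.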

|

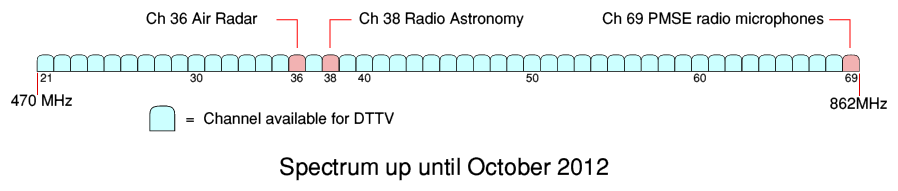

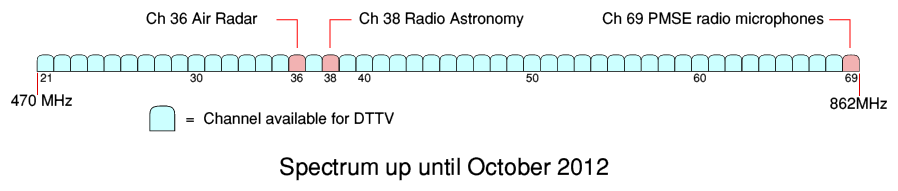

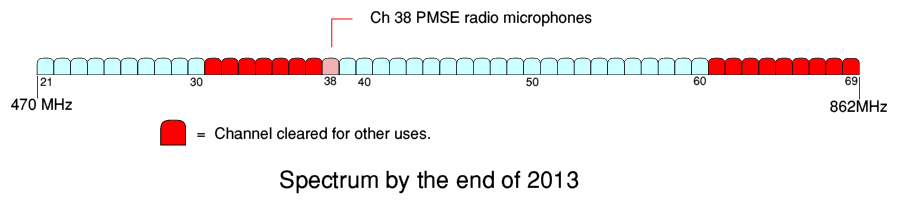

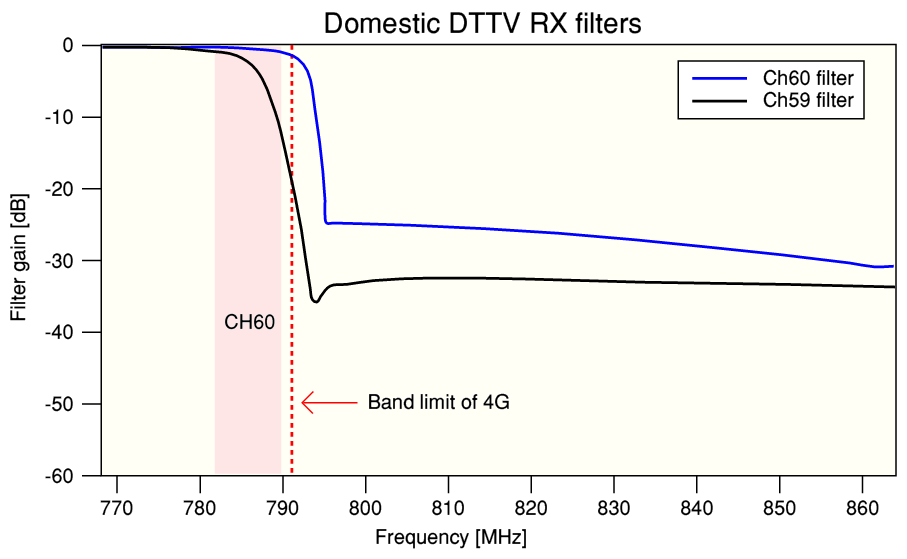

Changing Channel...

Back in the days when TV was ‘analogue’ each UHF channel carried just one TV station in a given area. So when changing from BBC1 to BBC2 people would say they were “changing channel”. We took for granted that ‘channel’ also referred to which TV station we wanted. But with digital TV we now get groups of TV stations, all bundled together so they can arrive in a single UHF channel. This gives us many more TV and sound radio stations. But, as a result, in many areas UHF ‘channel 60’ will actually be delivering BBC1 and BBC2 and a number of other BBC TV and radio ‘stations’. When you ‘tune’ your digital TV or recorder these days “changing channel” doesn’t mean what it used to in analogue TV days! So if the 4G interference blocks your ability to get UHF ch60 you will probably lose BBC1, BBC2, BBC3, BBC4, etc, along with the main BBC Radio 1, 2, 3, 4, etc. In other areas the block of TV/sound radio stations you’ll lose will be different. But note that UHF ‘ch60’ or ‘ch59’ doesn’t mean what you get when you press ‘60’ or ‘59’ on your TV remote control. It will probably mean many TV and sound radio stations with various numbers in your TV guide! |
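To make the distinction concrete, here is a small Python sketch of the idea that one UHF channel carries a whole bundle of stations. The table below is purely illustrative: it uses the ch60 example from the text, and the real contents of each UHF channel depend on your transmitter and area. The names `EXAMPLE_MULTIPLEXES` and `stations_lost` are made up for this example.

```python
# Illustrative only: which stations share a UHF channel varies by area.
# This entry mirrors the ch60 example given in the text.
EXAMPLE_MULTIPLEXES = {
    60: [
        "BBC1", "BBC2", "BBC3", "BBC4",
        "BBC Radio 1", "BBC Radio 2", "BBC Radio 3", "BBC Radio 4",
    ],
}


def stations_lost(blocked_uhf_channels, multiplexes):
    """Return every station carried on any of the blocked UHF channels."""
    lost = []
    for uhf_channel in sorted(blocked_uhf_channels):
        # A UHF channel missing from the table contributes nothing.
        lost.extend(multiplexes.get(uhf_channel, []))
    return lost


if __name__ == "__main__":
    # Losing one UHF channel takes out the whole bundle at once.
    for station in stations_lost({60}, EXAMPLE_MULTIPLEXES):
        print(station)
```

The point of the sketch is that the key (the UHF channel number) is not the number you press on the remote: blocking a single key removes every station in its list.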